Linear Regression with Python from scratch

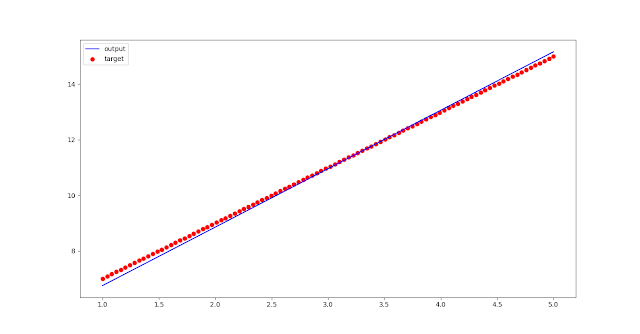

Linear regression figures out how strong a linear relationship there is between variables. The equation is very simple.

y = mX + b

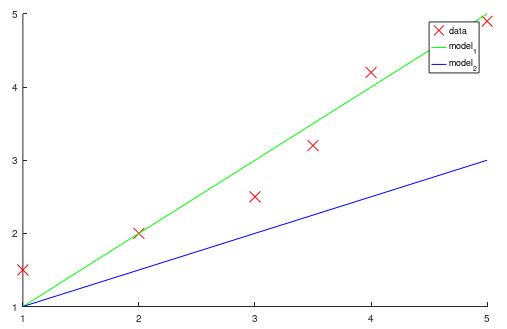
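As a small illustration of the equation, here is a minimal sketch in plain Python. The function name `predict` and the values chosen for `m` and `b` are assumptions made up for this example, not taken from the repository.

```python
def predict(x, m, b):
    """Return y = m * x + b for a single input x."""
    return m * x + b


# Hypothetical slope and intercept, chosen only for illustration.
m, b = 2.0, 1.0

predictions = [predict(x, m, b) for x in [0.0, 1.0, 2.0]]
print(predictions)  # [1.0, 3.0, 5.0]
```

Here `m` controls the slope of the line and `b` shifts it up or down, which is the intercept discussed in the rest of this post.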

This linear equation is what I tried to fit using a single Adaline. With a single Adaline, I could fit a linear equation without an intercept, but not one with an intercept. Linear regression is quite similar to Adaline. So frankly, I don't understand why linear regression works on a linear equation with an intercept but Adaline does not.

# Comparing Adaline and linear regression

First of all, while Adaline is used for classification, linear regression is used to find a relationship between variables. In spite of this difference, I say they are similar because both algorithms use gradient descent to update a weight and a bias. Each algorithm uses a different cost function: for Adaline I used SSE (sum of squared errors), and for linear regression I used MSE (mean squared error). The point is that both use the partial derivatives of the cost to update the weight and the bias, and the partial derivatives of SSE and MSE have nearly the same form.
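To make the update rule concrete, here is a minimal sketch of gradient descent for linear regression with MSE, written in plain Python. It is not the code from the repository: the function names, the toy data, the learning rate, and the number of epochs are all assumptions picked for this example. The gradients follow the MSE form given in [1].

```python
def mse_gradients(xs, ys, m, b):
    """Partial derivatives of MSE with respect to m and b."""
    n = len(xs)
    grad_m = 0.0
    grad_b = 0.0
    for x, y in zip(xs, ys):
        error = y - (m * x + b)      # target - output
        grad_m += -2.0 * x * error   # d/dm of error ** 2
        grad_b += -2.0 * error       # d/db of error ** 2
    return grad_m / n, grad_b / n


def fit(xs, ys, learning_rate=0.05, epochs=2000):
    """Run plain gradient descent starting from m = 0 and b = 0."""
    m, b = 0.0, 0.0
    for _ in range(epochs):
        grad_m, grad_b = mse_gradients(xs, ys, m, b)
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b


# Toy data generated from y = 2x + 1 (an assumption for this sketch).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

m, b = fit(xs, ys)
print(round(m, 3), round(b, 3))  # should be close to 2.0 and 1.0
```

On this toy data the fitted line recovers a nonzero intercept, because `b` is updated with its own gradient on every step.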

The partial derivative of SSE with respect to the weight is the sum of (target - output) * (-input), up to a constant factor (please refer to [2]).

The partial derivative of MSE is the same sum of (target - output) * (-input), divided by the number of samples, again up to a constant factor (please refer to [1]).

The only difference is the division by N (the number of samples, that is, the rows in the data). This confused me for a while, which is why I need to keep studying it.
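The relationship between the two gradients can be checked numerically. The sketch below is only an illustration under stated assumptions: the function names and toy data are hypothetical, and the constant factor of 2 is dropped from both gradients so that they match the forms written above. It shows that the summed gradient is exactly N times the averaged one, so an SSE update with learning rate `learning_rate / n` takes the same step as an MSE update with `learning_rate`.

```python
import math


def sse_gradient(xs, ys, m, b):
    """Sum of (target - output) * (-input) for the weight and the bias."""
    grad_m = 0.0
    grad_b = 0.0
    for x, y in zip(xs, ys):
        error = y - (m * x + b)   # target - output
        grad_m += error * (-x)
        grad_b += error * (-1.0)  # the bias behaves like a constant input of 1
    return grad_m, grad_b


def mse_gradient(xs, ys, m, b):
    """The same sums divided by the number of samples N."""
    n = len(xs)
    grad_m, grad_b = sse_gradient(xs, ys, m, b)
    return grad_m / n, grad_b / n


# Toy data from y = 2x + 1, evaluated at m = 0 and b = 0 (assumptions).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
m, b = 0.0, 0.0
n = len(xs)

sse_m, sse_b = sse_gradient(xs, ys, m, b)
mse_m, mse_b = mse_gradient(xs, ys, m, b)
print(sse_m, mse_m)  # -70.0 -14.0

learning_rate = 0.05
sse_step = (learning_rate / n) * sse_m
mse_step = learning_rate * mse_m
print(math.isclose(sse_step, mse_step))  # True
```

In other words, with the same learning rate the SSE update is N times larger than the MSE update, so SSE needs a smaller learning rate as the data set grows.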

TODO

- Research and summarize what actually differs between Adaline and linear regression.

- Research and summarize whether each algorithm can be used with other cost functions.

GitHub

https://github.com/DavidKimDY/ML-Algorithm-from-scratch

References

[1] https://ml-cheatsheet.readthedocs.io/en/latest/linear_regression.html#cost-function

[2] https://sebastianraschka.com/Articles/2015_singlelayer_neurons.html#gradient-descent
